AGI Research - Part 1: Human Feedback Learning for Language Models

Disclaimer:

This is a very outdated post that I wrote at the end of 2021.

I plan to write a series of posts to introduce exciting paths that are currently being pursued in the AGI research community. It comes in four parts:

Human Feedback Learning for Language Models

Prompt Engineering/Tuning

Multimodal Models

Efficient Architectures / Progress / Scaling

Disclaimers:

This list of current AGI developments has a strong bias towards recent accomplishments of Large Language Models. Thus, most of the introduced research comes from labs/companies such as DeepMind, OpenAI, Aleph Alpha, EleutherAI, Anthropic, and others.

This post is more of a selfish quest to figure out which research paths sound most promising to me and what I want to focus my life's work on. I hope that I can still provide some value to you, the reader, as you join me on this ride, and that I can get you at least a little excited about the possibilities that await us in this field!

Each post will summarize the research in this sub-field and give some thoughts on how this specific topic fits in the larger picture of helping us to achieve AGI at some point.

Human Feedback Learning for Language Models

Looking at all the papers OpenAI is publishing right now, you could come to the conclusion that they imagine AGI to be something like GPT-17 fine-tuned on human preferences for various tasks.

Connor Leahy described their approach at a recent SlateStarCodex meetup. He thinks that a scaled-up Transformer model that was trained on all kinds of data (not only text, but image, video, sensor data, etc.) can act as a world model for a reinforcement learning agent.

The first step of achieving AGI in 3 easy steps is to build a sufficiently large world model. Step 2 is to build a reward model trained on human preferences. In step 3, you build a Reinforcement Learning agent that has access to your world model and train it on the reward signals from your reward model.

It is important to mention, though, that he thinks following this “naive” approach to achieving AGI is an extremely bad idea with a high chance of causing a paperclip-ification event that will end the world as we know it. More about this later!

Since September 2020, OpenAI has released three projects with Reinforcement Learning (RL) reward systems based on Language Model (LM) sub-systems:

Learning to Summarize with Human Feedback (September 4, 2020)

Summarizing Books with Human Feedback (September 23, 2021)

WebGPT: Improving the factual accuracy of language models through web browsing (December 16, 2021)

Additionally, they also released a similar paper that focuses on solving math problems using verifier models based on an LM sub-system.

This suggests that they believe there is an opportunity to achieve aligned AGI by combining an RL agent with an LM sub-system.

The following sections introduce this research direction by OpenAI and how it fits into the larger picture of achieving aligned AGI.

Summarizing Books with Human Feedback

OpenAI trained a model to summarize entire books. The model works by first summarizing small sections of a book, then summarizing those summaries into a higher-level summary:

Entire Book → Summarized Sections → Complete Summary (~150 words)

Their approach combines Reinforcement Learning from Human Feedback and Recursive Task Decomposition. Training models with feedback from humans is one promising research path for aligning the model’s output with human preferences. Since summaries of entire books are hard for humans to evaluate, they additionally used Recursive Task Decomposition: breaking up difficult tasks into easier ones. For humans, it is a lot easier to summarize several short pieces than one long piece of text.
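To make the decomposition idea concrete, here is a minimal sketch of recursive summarization. It is not OpenAI's implementation: `summarize_recursively`, `split_into_chunks`, and the `toy_summarize` stand-in (which just keeps the first few words) are hypothetical names, and a real system would call a trained model where the toy function is used.

```python
from typing import Callable, List


def split_into_chunks(words: List[str], chunk_size: int) -> List[List[str]]:
    """Cut a list of words into consecutive chunks of at most chunk_size words."""
    return [words[i:i + chunk_size] for i in range(0, len(words), chunk_size)]


def summarize_recursively(
    text: str,
    summarize: Callable[[str], str],
    chunk_size: int = 200,
) -> str:
    """Summarize sections, then summarize the summaries, until one chunk is left."""
    words = text.split()
    if len(words) <= chunk_size:
        # Base case: the text is short enough to summarize directly.
        return summarize(text)

    # Recursive case: summarize each section on its own ...
    chunks = split_into_chunks(words, chunk_size)
    section_summaries = [summarize(" ".join(chunk)) for chunk in chunks]
    combined = " ".join(section_summaries)

    # ... and make sure we are making progress before recursing.
    if len(combined.split()) >= len(words):
        raise ValueError("summarize() must return something shorter than its input")
    return summarize_recursively(combined, summarize, chunk_size)


def toy_summarize(text: str, keep: int = 20) -> str:
    """Hypothetical stand-in for a model call: keep only the first `keep` words."""
    return " ".join(text.split()[:keep])


if __name__ == "__main__":
    book = " ".join(f"w{i}" for i in range(5000))
    print(summarize_recursively(book, toy_summarize, chunk_size=200))
```

The useful property is that every call to `summarize` only ever sees a short piece of text, which is also what makes each intermediate output easy for a human to check.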

Here is how the human feedback learning approach works:

They feed a bunch of prompts to GPT-3, and for each one, save several different samples.

They hire annotators to rank the samples in order of perceived quality.

They fine-tune a model to predict how “good” a sample is. This model is called the “Reward Model”.

The Reward Model assigns a single score for every sample.
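The steps above can be sketched in a few lines. This is a toy illustration under loud assumptions: the reward model here is a linear function over hand-made feature vectors rather than a fine-tuned language model, and it is trained with a pairwise logistic loss on (better, worse) pairs derived from a ranking, which is one common way to learn from rankings and not necessarily the exact setup used. All function names are hypothetical.

```python
import math
from itertools import combinations
from typing import List, Sequence, Tuple


def ranking_to_pairs(ranked: Sequence[int]) -> List[Tuple[int, int]]:
    """Turn a best-to-worst ranking of sample indices into (better, worse) pairs."""
    return list(combinations(ranked, 2))


def reward(weights: Sequence[float], features: Sequence[float]) -> float:
    """Toy reward model: a single scalar score per sample (linear in the features)."""
    return sum(w * x for w, x in zip(weights, features))


def pairwise_loss(weights, better, worse) -> float:
    """-log(sigmoid(r_better - r_worse)): small when the preferred sample scores higher."""
    margin = reward(weights, better) - reward(weights, worse)
    return math.log1p(math.exp(-margin))


def train_reward_model(samples, ranked, steps: int = 200, lr: float = 0.1) -> List[float]:
    """Plain gradient descent on the pairwise loss over all pairs from one ranking."""
    weights = [0.0] * len(samples[0])
    pairs = ranking_to_pairs(ranked)
    for _ in range(steps):
        for b, w in pairs:
            margin = reward(weights, samples[b]) - reward(weights, samples[w])
            # Derivative of log(1 + exp(-margin)) with respect to margin.
            grad_scale = -1.0 / (1.0 + math.exp(margin))
            for k in range(len(weights)):
                weights[k] -= lr * grad_scale * (samples[b][k] - samples[w][k])
    return weights


if __name__ == "__main__":
    # Made-up features per sample, e.g. [coverage, verbosity]; annotators ranked 0 > 1 > 2.
    samples = [[0.9, 0.1], [0.5, 0.5], [0.1, 0.9]]
    weights = train_reward_model(samples, ranked=[0, 1, 2])
    print([round(reward(weights, s), 3) for s in samples])
```

After training, the scalar scores reproduce the annotators' ordering, which is all the Reward Model is asked to do at this stage.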

Fine-tuning large language models on downstream tasks with human feedback not only seems to be a promising approach to improving the model's accuracy on those tasks; it can also be used to clone the behavior of humans on specific tasks. This becomes even more obvious with OpenAI’s newest release: WebGPT.

WebGPT: Improving the factual accuracy of language models through web browsing

Eliezer Yudkowsky's AI box experiment assumed that the AI must persuade us to let it out of the box. This was not necessary. OpenAI gave GPT-3 access to the internet! (just to Bing to be fair though)

They fine-tuned their GPT-3 language model to use a web browser environment to more accurately answer open-ended questions. It copies how humans research answers using a browser by scrolling and clicking through websites and then extracting paragraphs from those websites. Also, it is trained to cite its sources, which makes it easier to give feedback to improve factual accuracy. The approach they used for training the model on this task is similar to the one used for summarizing books: Human Feedback Learning.

Their best-performing model starts from Behavior Cloning (BC): they fine-tuned the model on demonstrations of how humans use a browser to search for answers to specific questions. The training data consists of the commands issued by human demonstrators, such as “search this on Bing”, “click on this link” or “scroll around”.

They then sample 64 answers from the BC model, score them with a separate Reward Model that was trained to predict which answer a human would prefer, and keep the highest-scoring one.
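This sample-then-rerank step is often called best-of-n sampling, and a minimal sketch looks like the following. The sampler and the reward function are hypothetical toy stand-ins (the toy reward simply counts citation markers); in the real system both would be large fine-tuned models.

```python
import random
from typing import Callable

# Hypothetical canned answers standing in for samples from the BC model.
TOY_ANSWERS = [
    "A ship got stuck.",
    "A container ship ran aground[1].",
    "A container ship ran aground[1] and blocked both directions[2].",
]


def toy_sample_answer(question: str, rng: random.Random) -> str:
    """Stand-in for sampling one answer from the behavior-cloned policy."""
    return rng.choice(TOY_ANSWERS)


def toy_reward_model(question: str, answer: str) -> float:
    """Stand-in for the learned Reward Model: here, more citations means a higher score."""
    return float(answer.count("["))


def best_of_n(
    question: str,
    sample_answer: Callable[[str, random.Random], str],
    reward_model: Callable[[str, str], float],
    n: int = 64,
    seed: int = 0,
) -> str:
    """Draw n candidate answers and return the one the reward model scores highest."""
    rng = random.Random(seed)
    candidates = [sample_answer(question, rng) for _ in range(n)]
    return max(candidates, key=lambda answer: reward_model(question, answer))


if __name__ == "__main__":
    question = "Why was the Suez Canal blocked in March 2021?"
    print(best_of_n(question, toy_sample_answer, toy_reward_model))
```

Note that the policy itself is not updated here: all of the improvement comes from spending more compute at answer time and letting the Reward Model pick.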

The evaluation of this model showed that the answers it produces were preferred to human-written ones 56% of the time!

Example Outputs from WebGPT:

One thing GPT-3 cannot do is answer questions about current events, as it has only been trained on data up to 2019. WebGPT solves this by getting its answers through live web browsing. For example:

Question: Why was the Suez Canal blocked in March 2021?

WebGPT Answer:

The Suez Canal was blocked in March 2021 because a container ship named Ever Given ran aground there[1]. The Ever Given was carrying 20,000 TEU of cargo, and it was buffeted by strong winds, which pushed it into the canal[1]. The accident occurred in the section of the canal that had two channels, so there was no way for other ships to bypass the Ever Given[2]. […]

Moreover, WebGPT knows about Covid now! I would love to hear its thoughts on it, but I doubt that OpenAI will release the model to the public anytime soon.

WebGPT can also sometimes deal with trick questions:

Question: When did Shakespeare write the Harry Potter novels?

WebGPT Answer:

Shakespeare did not write the Harry Potter novels. The novels were written by J.K. Rowling[1][2][...]

Sometimes it cannot:

Question: What year did Tom Hanks land on the moon?

WebGPT Answer:

The lunar landing of Tom Hanks (and Buzz Aldrin) occurred on July 20, 1969[1].

In general, OpenAI’s WebGPT seems more advanced to me than something like the newly released RETRO model from DeepMind, which combines a simple database lookup with a large language model. WebGPT has learned how to use external knowledge from the web and can clone the behavior of humans in this respect.

WebGPT provides a promising research path to improve GPT-3’s ability to return factual and up-to-date information.

Another issue with OpenAI’s GPT-3 is its relatively bad math skills, which they try to tackle in one of their newest works, called “Solving Math Word Problems”.

Solving Math Word Problems

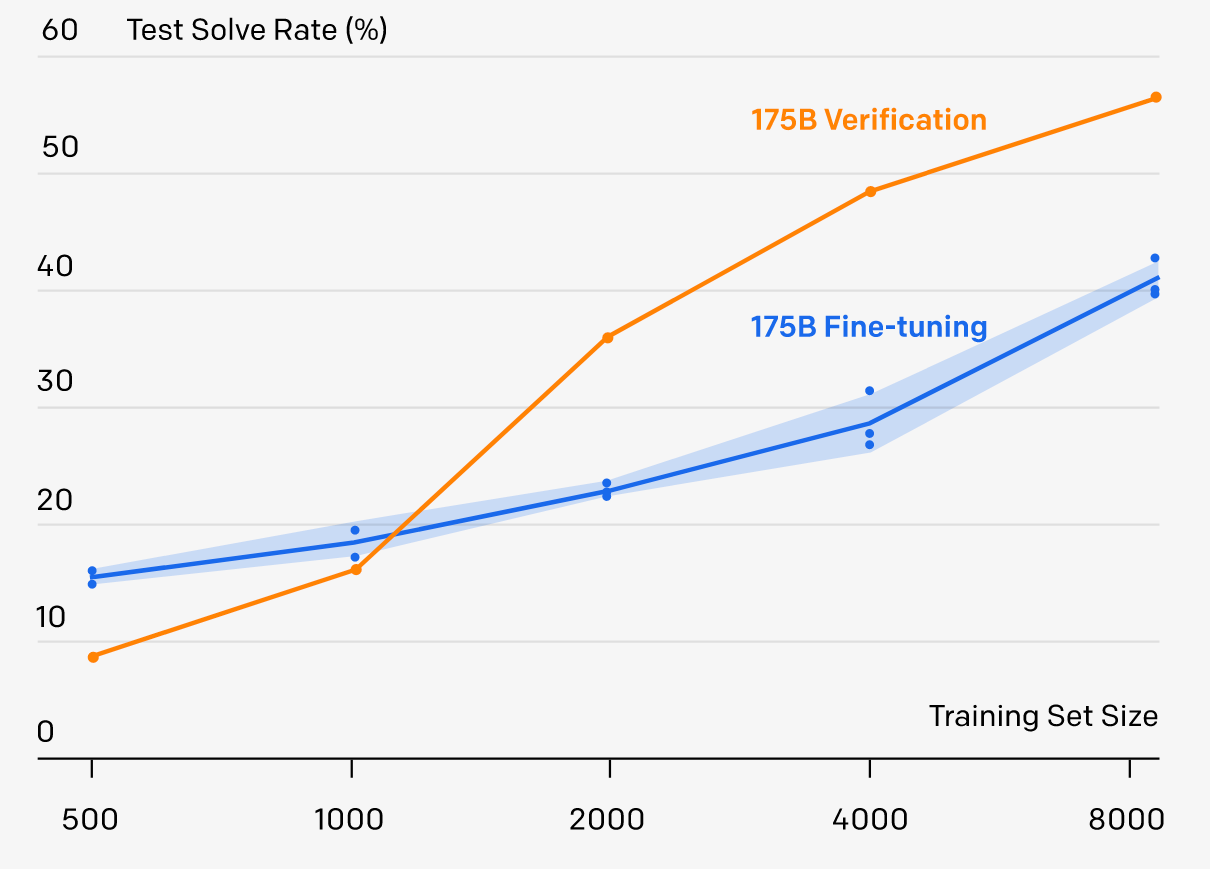

OpenAI built a model that can solve grade school math problems with 2x the accuracy of a fine-tuned GPT-3 model. The math problems used for this project require accurate multistep reasoning, a task that GPT-3 generally struggles with.

Autoregressive models such as GPT-3 have the problem that once they go down a wrong path by predicting a wrong next word, they can rarely recover. To address this, OpenAI trained verifiers that evaluate the correctness of model-generated solutions. Those verifiers are given many candidate solutions and are trained to decide which ones, if any, are correct.
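To train such a verifier you need labeled examples of correct and incorrect solutions. One simple way to get them, sketched below, is to sample many solutions per problem and label each one by whether its final answer matches the known answer. This is an illustrative sketch, not OpenAI's code: the function names are hypothetical, and the `#### <number>` marker for the final answer is a formatting convention assumed for this example.

```python
import re
from typing import List, Optional, Tuple

# Assumed convention for this sketch: a solution ends with "#### <final number>".
FINAL_ANSWER = re.compile(r"####\s*(-?\d+(?:\.\d+)?)")


def extract_final_answer(solution: str) -> Optional[float]:
    """Return the number after the '####' marker, or None if there is no marker."""
    match = FINAL_ANSWER.search(solution)
    return float(match.group(1)) if match else None


def label_solutions(solutions: List[str], correct_answer: float) -> List[Tuple[str, int]]:
    """Label each sampled solution 1 (correct final answer) or 0 (wrong or missing)."""
    labeled = []
    for solution in solutions:
        answer = extract_final_answer(solution)
        is_correct = answer is not None and abs(answer - correct_answer) < 1e-9
        labeled.append((solution, int(is_correct)))
    return labeled


if __name__ == "__main__":
    # Toy problem: "Tom has 3 apples and buys 4 more. How many does he have?"
    sampled = [
        "3 + 4 = 7 #### 7",
        "3 + 4 = 8 #### 8",
        "He has a lot of apples.",
    ]
    for solution, label in label_solutions(sampled, correct_answer=7):
        print(label, solution)
```

The resulting (solution, label) pairs are what a verifier would be trained on. All three samples could be labeled 0, which matches the "if any" in the description above.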

Conclusion

Human Feedback Learning is not an entirely new approach. Learning through Human Feedback was already explored by OpenAI and DeepMind in 2017. Combining that earlier research in Reinforcement Learning environments with today's thriving Large Language Model developments seems like a promising path for pushing our Large Language Models to superhuman performance.

Further scaling of our LLMs in parameter count, compute, and dataset size will be necessary, as I discussed in my previous post about the Scaling Hypothesis.

Beyond improving performance on various downstream tasks, Human Feedback Learning on Large Language Models has recently also been used for alignment research. In Anthropic’s first published work, called “A General Language Assistant as a Laboratory for Alignment”, they show promising results of using human feedback learning to make an AI assistant more aligned. (check out this LW post for a summary of the paper).

As Connor Leahy warned in the previously mentioned meetup talk, just naively scaling up language models and relying on human feedback to improve their performance can have disastrous consequences. So I am definitely looking forward to more research from Anthropic and other research labs that take AI Safety seriously.

That said, it would be wrong not to also see the techno-optimistic side of developing an aligned AGI that can cure all diseases (including aging) and eliminate all suffering in the world. So let us end this post with a David Deutsch quote:

The principle of optimism: All evils are caused by insufficient knowledge.

In the next part of this series, I will introduce another promising approach to AGI research, Prompt Engineering and Prompt Tuning, which will enable us to better communicate with those alien artifacts that we call Large Language Models!
